Tired of relying on the cloud? I tested 20+ local LLMs on my machine to find the best coding assistant for Python. The results were surprising.

The Allure of Local AI

There’s something incredibly powerful about running an AI model directly on your own machine. No network round trips, no code leaving your machine, no subscription fees: just raw, uninterrupted coding power. As a developer who lives in Python, I’ve been on a quest to find the perfect local coding companion. I didn’t want a jack-of-all-trades; I wanted a master of one: code generation and explanation.

My testing rig isn’t a supercomputer, but a capable workstation, so the results should carry over to other developers with similarly powerful setups.

The Contenders: A Gladiator Arena of Models

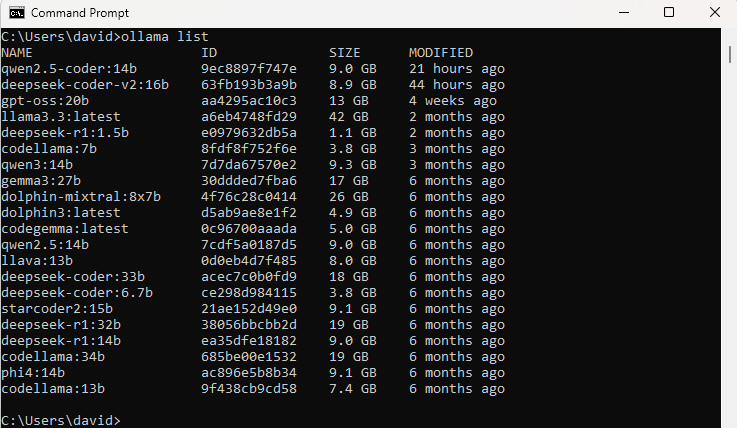

I pulled down over twenty of the most promising open-weight models available today. The arena included everything from compact 1.5B parameter models to the behemoth 70B Llama 3.3. Here’s a snapshot of the key players:

- The Giants: llama3.3:70b (42GB), dolphin-mixtral:8x7b (26GB), gemma3:27b (17GB)

- The Specialized Coders: deepseek-coder:33b, codellama:34b, starcoder2:15b, qwen2.5-coder:14b

- The New Challengers: deepseek-coder-v2:16b, gpt-oss:20b, qwen3:14b

- The Efficient Lightweights: deepseek-r1:1.5b, phi4:14b, codellama:7b

The goal was simple: which model provides the most accurate, context-aware, and intelligent Python code assistance while running efficiently on local hardware?

The Testing Gauntlet

I put each model through a series of challenges designed to reflect real-world Python development:

- Algorithm Implementation: “Write a Python function to find the longest palindromic substring in a given string.”

- Library Usage (Pandas): “Create a DataFrame from this sample data and then group by column X to get the average of Y.”

- Bug Fixing & Explanation: “Here’s a snippet of code with a subtle bug. What is it and how do you fix it?”

- Code Explanation: “Explain this dense regex pattern and the following list comprehension.”

- API Design: “Draft a FastAPI endpoint for a user login.”
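A known-good reference makes it easier to judge answers to the first challenge. One possible sketch uses the expand-around-center approach. It is an illustration, not output from any of the models tested, and the function name is an assumption.

```python
def longest_palindromic_substring(s: str) -> str:
    """Return one longest palindromic substring of s (O(n^2) time, O(1) extra space)."""
    if not s:
        return ""

    def expand(left: int, right: int) -> tuple[int, int]:
        # Grow outward while the characters match, then return
        # half-open slice bounds of the palindrome that was found.
        while left >= 0 and right < len(s) and s[left] == s[right]:
            left -= 1
            right += 1
        return left + 1, right

    best_start, best_end = 0, 1
    for center in range(len(s)):
        # Try an odd-length center (i, i) and an even-length center (i, i + 1).
        for start, end in (expand(center, center), expand(center, center + 1)):
            if end - start > best_end - best_start:
                best_start, best_end = start, end
    return s[best_start:best_end]
```

When several palindromes tie for longest, this version returns the leftmost one, so a model that returns a different palindrome of the same length is not necessarily wrong.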

I evaluated them on accuracy, reasoning clarity, code quality (Pythonic-ness), and speed of response.
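Speed is the one criterion here that is easy to put a number on. Here is a minimal sketch, assuming the models are served by Ollama and called without streaming. In that case each response from its generate endpoint carries `eval_count` (tokens generated) and `eval_duration` (nanoseconds), which together give tokens per second. The helper names and the shape of the `runs` dictionary are hypothetical.

```python
from statistics import mean


def tokens_per_second(response: dict) -> float:
    """Generation throughput from one non-streaming Ollama response."""
    duration_s = response["eval_duration"] / 1e9  # nanoseconds -> seconds
    if duration_s <= 0:
        raise ValueError("eval_duration must be positive")
    return response["eval_count"] / duration_s


def mean_throughput(runs: dict[str, list[dict]]) -> dict[str, float]:
    """Average tokens/second per model over several prompts."""
    return {
        model: mean(tokens_per_second(response) for response in responses)
        for model, responses in runs.items()
    }
```

Averaging over several prompts smooths out the first-call penalty of loading a model into memory, which Ollama reports separately as `load_duration`.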

And the Winner Is… DeepSeek-Coder-V2:16b

After extensive testing, one model consistently rose to the top: deepseek-coder-v2:16b.

It wasn’t the biggest model I tested, but it was, without a doubt, the most effective for my Python workflow. Here’s why it won me over:

1. Striking the Perfect Balance: At 16 billion parameters and 8.9GB, it hits an incredible sweet spot. It’s large enough to be profoundly capable but small enough to run quickly and responsively on a consumer-grade GPU with 16-24GB of VRAM, or even efficiently on CPU.

2. Exceptional Python Proficiency: Its code wasn’t just correct; it was idiomatic. It used Pythonic constructs like list comprehensions, context managers (with statements), and elegant libraries like pathlib where appropriate. It felt like pairing with a senior developer.

3. Superb Reasoning and Context: When I asked it to explain its code or a complex concept, its responses were clear, structured, and insightful. It didn’t just give an answer; it taught me. Its ability to understand the intent behind my queries was a notch above most other models.

4. Performance per Gigabyte: This is its killer feature. deepseek-coder-v2:16b delivers performance that rivals or even surpasses models twice its size (like the original 33B version). Much of that efficiency comes from its Mixture-of-Experts architecture, which activates only a fraction of its parameters for each token.
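To make the "idiomatic" point from item 2 concrete, here is a small sketch of the style in question, combining pathlib, a `with` block, and a list comprehension. It is an illustration of those constructs rather than actual model output, and the function name is made up.

```python
from pathlib import Path


def nonblank_line_counts(directory: Path, pattern: str = "*.py") -> dict[str, int]:
    """Map each matching file name in a directory to its number of non-blank lines."""
    counts: dict[str, int] = {}
    for path in sorted(directory.glob(pattern)):      # pathlib instead of os.listdir + string joins
        with path.open(encoding="utf-8") as handle:   # context manager closes the file for us
            nonblank = [line for line in handle if line.strip()]  # list comprehension as a filter
        counts[path.name] = len(nonblank)
    return counts
```

Calling `nonblank_line_counts(Path("."))` would report on the Python files in the current directory.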

How It Stacks Up Against the Competition

- vs. Larger Models (Llama3.3:70b, Codellama:34b): While these giants are incredibly powerful, their massive size (19-42GB) makes them slower and often overkill for everyday coding tasks. The marginal gain in quality didn’t justify the significant hit to responsiveness for me.

- vs. Other “Coder” Models (OG DeepSeek-Coder, CodeLlama): The V2 version is a clear evolution. It’s more conversational, makes fewer subtle mistakes, and has a better understanding of complex instructions than its predecessors.

- vs. Lightweights (Phi4, DeepSeek-R1): The smaller models are blazing fast but often struggle with complexity. They are great for snippets but can fail on larger, more abstract tasks where deepseek-coder-v2 excels.
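The download sizes quoted in this article can be turned into a rough "bits per weight" figure, which helps explain why a 70B model fits in 42GB at all. This is back-of-the-envelope arithmetic under stated assumptions: GB is taken as 10^9 bytes, the parameter count is read straight from the model tag, and file metadata is ignored.

```python
def bits_per_weight(size_gb: float, params_billion: float) -> float:
    """Approximate storage per parameter: (size in bits) / (number of parameters)."""
    # The 1e9 factors in GB and in "billions of parameters" cancel out.
    return size_gb * 8 / params_billion


# (download size in GB, parameters in billions), as quoted in this article
quoted = {
    "llama3.3:70b": (42, 70),
    "gemma3:27b": (17, 27),
    "deepseek-coder-v2:16b": (8.9, 16),
}

for name, (size_gb, params_b) in quoted.items():
    print(f"{name}: ~{bits_per_weight(size_gb, params_b):.2f} bits per weight")
```

All three land between roughly 4.4 and 5.1 bits per weight, which would be consistent with weights quantized to around 4 bits rather than stored at 16. That matters when comparing quality across models, since every contender is being judged in compressed form.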

My Verdict and Recommendation

If you are a Python developer looking for a single, best-in-class local AI assistant to integrate into your daily workflow, deepseek-coder-v2:16b is, in my opinion, the current champion. The next best option is codellama:13b.

Deepseek-coder-v2:16b offers an almost perfect blend of capability, speed, and efficiency. It has become an indispensable tool in my toolkit for everything from generating boilerplate code and debugging to explaining new concepts.

Ready to try it yourself? It’s incredibly easy to get started:

bash

ollama pull deepseek-coder-v2:16b

ollama run deepseek-coder-v2:16b
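Once the model is pulled, it can also be called from Python rather than the interactive prompt. This is a minimal sketch using only the standard library. It assumes Ollama is running on its default local port (11434) and uses its generate endpoint with streaming turned off; the helper names `build_request` and `ask` are my own.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumes Ollama's default host and port


def build_request(prompt: str, model: str = "deepseek-coder-v2:16b") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-coder-v2:16b", timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text (requires the server to be running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as reply:
        return json.loads(reply.read().decode("utf-8"))["response"]
```

With the server up, `print(ask("Write a Python function that reverses a string."))` should print the model's answer. The generous timeout leaves room for the first call, when the model has to be loaded into memory.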

I’d love to hear about your experiences. Have you found a different model that works better for your use case? Let me know in the comments!